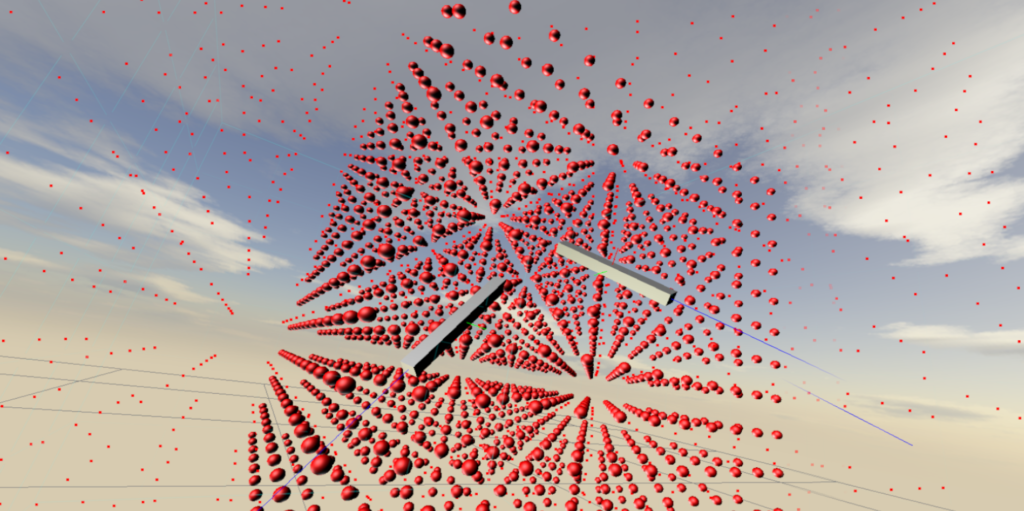

Here are some mock-ups for a guitar quartet I’m working on that uses a motorized, star-shaped guitar pick instrument. The motors allow for tight synchronization and rhythmic coordination within the ensemble, generating slowly evolving phase-shifted textures and polyrhythms.
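The post does not describe how the motors are driven, so the following Python sketch only illustrates the general idea behind the phasing, under stated assumptions: each motor spins at a constant, hypothetical RPM, and a star-shaped pick with `points` tips plucks the string once per tip. Two picks running at nearly the same speed drift apart and gradually realign, which is one way slowly evolving phase-shifted patterns can arise.

```python
# Hypothetical model (not the project's code): each motor turns at a constant
# speed, and a star-shaped pick with `points` tips plucks the string once per tip.

def pluck_times(rpm, points, duration_s):
    """Times (seconds) at which one pick strikes its string, starting at t = 0."""
    interval = 60.0 / (rpm * points)               # seconds between plucks
    count = int(duration_s / interval + 1e-9) + 1  # include the pluck at t = 0
    return [round(i * interval, 6) for i in range(count)]


def phase_offset(rpm_a, rpm_b, points, t):
    """Offset between two picks at time t, as a fraction of one pluck interval."""
    plucks_a = t * rpm_a * points / 60.0
    plucks_b = t * rpm_b * points / 60.0
    return (plucks_a - plucks_b) % 1.0


def realign_period(rpm_a, rpm_b, points):
    """Seconds until two slightly detuned picks line up again."""
    return 60.0 / (abs(rpm_a - rpm_b) * points)


if __name__ == "__main__":
    print(pluck_times(60, 5, 1.0))       # [0.0, 0.2, 0.4, 0.6, 0.8, 1.0]
    print(phase_offset(61, 60, 5, 6.0))  # 0.5: the two picks now alternate
    print(realign_period(61, 60, 5))     # 12.0
```

With 60 and 61 RPM on 5-point picks, the two pluck streams are half an interval apart after 6 seconds and line up again after 12. Giving the motors the same speed but different tip counts (say 3 and 4) would instead produce a fixed 3:4 polyrhythm.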


-

Synth Instruments

SynthBox and MIDI_Wand are two instruments I built using the Teensy microcontroller platform and the wonderful audio library that goes with it. SynthBox is built on the Teensy 4.1; its built-in battery can power an external MIDI keyboard, which it reads through the USB host library. In addition to the MIDI keyboard, it can be played with OSC messages over a WiFi network, using an added ATWINC1500 chip.

MIDI_Wand is a MIDI controller (and FM synth) that uses an IMU sensor (pitch/roll/yaw) to generate melodies. Buttons provide simple control over the melodies and let you change the type of scale.
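One plausible way to turn IMU readings into melodies, assumed here rather than taken from the MIDI_Wand code, is to quantize an orientation angle to the degrees of the currently selected scale. This Python sketch maps pitch (in degrees) onto two octaves of a scale; the scale table, the ±90° range, and the root note are hypothetical choices.

```python
# Hypothetical scale table: semitone offsets above the root note.
SCALES = {
    "major": [0, 2, 4, 5, 7, 9, 11],
    "minor_pentatonic": [0, 3, 5, 7, 10],
}


def pitch_to_note(pitch_deg, scale="major", root=60, octaves=2):
    """Map an IMU pitch angle in [-90, 90] degrees to a MIDI note in the given scale."""
    steps = SCALES[scale]
    n = len(steps) * octaves                          # number of playable notes
    clamped = max(-90.0, min(90.0, pitch_deg))        # ignore out-of-range readings
    index = min(int((clamped + 90.0) / 180.0 * n), n - 1)
    octave, degree = divmod(index, len(steps))
    return root + 12 * octave + steps[degree]
```

A button handler could simply cycle through the keys of `SCALES`, and roll or yaw could be mapped the same way to velocity or note length.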

-

MUSI 425: Final Presentations

Students presented their final projects at the Off Broadway Theater at Yale University. Work included original audio/visual composition, interactive installations, and algorithmic composition/performance. All of the projects were presented on the theater’s 10′ × 36′ LED video wall. Congratulations to all who participated!

-

Instrument Design Final Projects

Here are some highlights from the ENAS 344 / MUSI 415: Musical Acoustics and Instrument Design final project presentations, Spring 2021.

-

Imitative Counterpoint

Here is a short article I wrote about my work with virtual reality and my collaborations with game designer Johan Warren.

Here is a short video demonstration of the game.

-

Creepy Robot

My work in electronic music and instrument building often involves aspects of writing – or hacking apart – computer code, and programming small embedded microprocessors. This kinetic sculpture is the result of experiments in controlling robotic movement and deep learning algorithms in computer vision.

The piece uses a camera coupled to a computer vision algorithm that identifies the most conspicuous, attention-grabbing element in the visual field (Itti et al., IEEE PAMI, 1998). Camera input is converted into five parallel streams according to color, intensity, motion, orientation, and flicker, which are visible at the bottom left of the screen. These streams are then weighted and fed into a neural network to generate a saliency map, visible on the right side of the screen. The robot arm is then programmed to move toward the most salient object, marked by the green ring on the left of the screen.
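The saliency model in the Itti et al. paper computes center-surround feature maps at several scales and combines them with its own normalization operator. The Python sketch below is a much simpler stand-in that only shows the final steps described above: normalize each channel, take a weighted sum to get a saliency map, pick its maximum, and convert that location into an offset the arm could steer toward. Channel names, weights, and the `aim_error` steering signal are hypothetical.

```python
def normalize(feature_map):
    """Rescale a 2-D map (list of rows) to [0, 1]; a flat map becomes all zeros."""
    flat = [v for row in feature_map for v in row]
    lo, hi = min(flat), max(flat)
    if hi == lo:
        return [[0.0 for _ in row] for row in feature_map]
    return [[(v - lo) / (hi - lo) for v in row] for row in feature_map]


def saliency_map(channels, weights):
    """Weighted sum of normalized, same-sized feature channels (simplified, not Itti's N(.))."""
    first = next(iter(channels.values()))
    rows, cols = len(first), len(first[0])
    total = [[0.0] * cols for _ in range(rows)]
    for name, fmap in channels.items():
        norm = normalize(fmap)
        w = weights.get(name, 1.0)  # hypothetical per-channel weight
        for r in range(rows):
            for c in range(cols):
                total[r][c] += w * norm[r][c]
    return total


def most_salient(smap):
    """(row, col) of the largest value in the saliency map."""
    _, r, c = max((v, r, c) for r, row in enumerate(smap) for c, v in enumerate(row))
    return r, c


def aim_error(target, shape):
    """Hypothetical steering signal: (x, y) offset of target from image center, within [-1, 1]."""
    (r, c), (rows, cols) = target, shape
    cy, cx = (rows - 1) / 2, (cols - 1) / 2
    return (c - cx) / max(cx, 1), (r - cy) / max(cy, 1)
```

With a motion spike weighted twice as heavily as an intensity spike, the motion location wins, and its offset from the image center is what a controller would use to move the arm.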

This simple coupling – computer vision and movement – generates eerily life-like behaviors that are often delightfully unpredictable.

Below is some documentation of early prototypes.

-

Yale Laptop Ensemble

We had a fantastic – and big! – group of students in this year’s Laptop Ensemble. You can visit the class website here for more information, and watch a few videos of rehearsals and the final presentations below.

-

Musical Acoustics and Instrument Design

Dr. Larry Wilen and I co-teach a course in musical acoustics and instrument design in Yale’s Center for Engineering Innovation and Design. Here’s a brief video from a story WNPR produced on the class.

-

Sprout Instrument

I have been working with a team of undergraduate students to design an interface for music production and sound synthesis using HP’s immersive computing platform, the Sprout. We have been using the depth camera to identify and segment physical objects in the workspace, and then using the downward-facing projector to map animations onto the surfaces of those objects. This project is part of Blended Reality, an applied research grant co-funded by HP and Yale ITS.
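The post does not detail the segmentation step, and the sketch below does not use HP's SDK. It assumes a depth frame given as distances in millimeters from the downward-facing camera to each pixel and a flat table at a known distance, then labels connected regions that rise above the table; the bounding box of each region is one place a projected animation could be anchored. All names and thresholds are hypothetical.

```python
from collections import deque


def segment_objects(depth_mm, table_mm, min_height_mm=10, min_pixels=4):
    """Label 4-connected regions that rise above a flat table surface.

    depth_mm: 2-D list of camera-to-surface distances in millimeters.
    Returns a list of objects, each a list of (row, col) pixels.
    """
    rows, cols = len(depth_mm), len(depth_mm[0])
    # A pixel belongs to an object if it is noticeably closer than the table.
    mask = [[depth_mm[r][c] < table_mm - min_height_mm for c in range(cols)]
            for r in range(rows)]
    seen = [[False] * cols for _ in range(rows)]
    objects = []
    for r in range(rows):
        for c in range(cols):
            if not mask[r][c] or seen[r][c]:
                continue
            # Breadth-first flood fill over 4-connected neighbors.
            region, queue = [], deque([(r, c)])
            seen[r][c] = True
            while queue:
                y, x = queue.popleft()
                region.append((y, x))
                for ny, nx in ((y + 1, x), (y - 1, x), (y, x + 1), (y, x - 1)):
                    if 0 <= ny < rows and 0 <= nx < cols and mask[ny][nx] and not seen[ny][nx]:
                        seen[ny][nx] = True
                        queue.append((ny, nx))
            if len(region) >= min_pixels:  # drop specks of sensor noise
                objects.append(region)
    return objects


def bounding_box(region):
    """(top, left, bottom, right) of a region, e.g. for placing a projection."""
    ys = [y for y, _ in region]
    xs = [x for _, x in region]
    return min(ys), min(xs), max(ys), max(xs)
```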